RAG vs. Traditional LLMs: Enhancing Knowledge Accuracy in Text Generation

Discover how Retrieval-Augmented Generation (RAG) improves on the knowledge accuracy of traditional LLMs by combining retrieval at query time with text generation.

Roy

Large Language Models (LLMs) have revolutionized text generation with their ability to produce coherent, human-like responses. However, they face a significant limitation: knowledge accuracy. Traditional LLMs, such as GPT models, are pre-trained on massive datasets, and their knowledge is frozen at the point training ends. As a result, they can hallucinate, generating plausible but incorrect information, especially when queried about niche or rapidly changing topics.

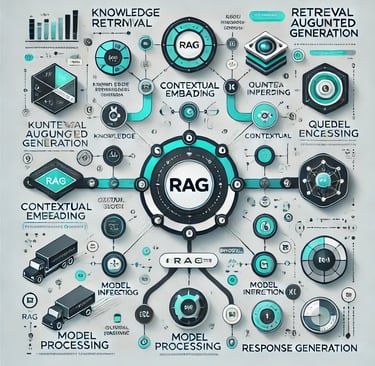

This is where Retrieval-Augmented Generation (RAG) comes into play. RAG combines the generative capabilities of LLMs with an external retrieval system, bridging the gap between fluent text generation and up-to-date, verifiable information. Instead of relying solely on the knowledge encoded during training, a RAG pipeline fetches relevant information from external databases or documents at query time and supplies it to the model alongside the user's question. This dynamic retrieval step improves the model's ability to give accurate, contextually relevant answers.
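The query-time retrieval step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular RAG framework works: it assumes a tiny in-memory document list and uses bag-of-words cosine similarity as a stand-in for the dense embeddings and vector database a production pipeline would typically use. The function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Score every document against the query at query time and return the top k."""
    q_vec = Counter(tokenize(query))
    scored = [(cosine(q_vec, Counter(tokenize(d))), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages ahead of the question so the LLM conditions on them."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical sample corpus and query (assumption, for illustration only).
docs = [
    "The 2024 policy update raised the reimbursement cap to 5,000 dollars.",
    "Our office is closed on public holidays.",
    "Reimbursement requests must be filed within 30 days.",
]
query = "What is the reimbursement cap?"
top = retrieve(query, docs, k=1)
prompt = build_prompt(query, top)
print(prompt)  # in a real pipeline, this prompt would be sent to the LLM
```

Here the policy passage scores highest, so it is the context the generator would see. Swapping the bag-of-words scorer for an embedding model changes only `retrieve`; the prompt-assembly step stays the same.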

For instance, in industries like legal or healthcare, where precise and up-to-date information is critical, RAG can substantially reduce the risk of errors. By integrating a retrieval system, RAG grounds the AI's responses in retrieved source documents, so answers can be traced back to and checked against those sources. This makes it well suited to applications that demand factual accuracy, provided the underlying document collection is itself reliable.
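One common way to encourage grounding is to label each retrieved passage with a source ID, instruct the model to answer only from those passages and cite them, and then check that every citation in the answer points to a passage that was actually retrieved. The sketch below assumes that convention; the bracketed-ID citation format, the prompt wording, the helper names, and the sample answer are all hypothetical. Note that a passing check only shows the citations are valid IDs, not that the cited passage actually supports the claim.

```python
import re


def build_grounded_prompt(query: str, passages: dict[str, str]) -> str:
    """Label each retrieved passage with its source ID and ask the model to cite those IDs."""
    sources = "\n".join(f"[{sid}] {text}" for sid, text in passages.items())
    return (
        "Answer using only the sources below. Cite source IDs in square brackets, "
        "and reply 'I don't know' if the sources do not contain the answer.\n\n"
        f"{sources}\n\nQuestion: {query}\nAnswer:"
    )


def unsupported_citations(answer: str, passages: dict[str, str]) -> set[str]:
    """Return bracketed IDs cited in the answer that match no retrieved passage."""
    cited = set(re.findall(r"\[([^\[\]]+)\]", answer))
    return cited - set(passages)


# Hypothetical retrieved passages and model answer (assumptions, for illustration only).
passages = {
    "S1": "The 2024 policy update raised the reimbursement cap to 5,000 dollars.",
    "S2": "Reimbursement requests must be filed within 30 days.",
}
prompt = build_grounded_prompt("What is the reimbursement cap?", passages)
answer = "The cap is 5,000 dollars [S1], per the 2023 memo [S3]."
print(unsupported_citations(answer, passages))  # {'S3'}
```

An answer that cites `S3` is flagged because no such passage was retrieved, which is a cheap signal that the model may be drawing on something other than the supplied sources.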

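While traditional LLMs excel at creative writing and conversational tasks, RAG is better suited to knowledge-intensive domains. However, RAG systems have their own challenges: the extra retrieval step adds computational cost and latency, and answer quality depends heavily on the retriever and the document database. If the retriever surfaces irrelevant or outdated passages, the model will be grounded in the wrong material.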
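Because answer quality hinges on the retriever, it can help to measure retrieval separately from generation. A standard information-retrieval metric for this is recall@k: the fraction of a query's relevant documents that appear in the top k results. The sketch below assumes a small, hand-labeled set of queries with known relevant document IDs; the IDs and rankings shown are made up for illustration.

```python
def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant document IDs that appear in the top-k retrieved IDs."""
    if not relevant:
        raise ValueError("relevant must be non-empty")
    hits = len(set(ranked[:k]) & relevant)
    return hits / len(relevant)


def mean_recall_at_k(runs: list[tuple[list[str], set[str]]], k: int) -> float:
    """Average recall@k over a labeled set of (ranked IDs, relevant IDs) pairs."""
    return sum(recall_at_k(ranked, rel, k) for ranked, rel in runs) / len(runs)


# Hypothetical evaluation set: retriever output per query, plus the labeled relevant IDs.
runs = [
    (["d3", "d1", "d7"], {"d1"}),        # relevant doc ranked 2nd
    (["d2", "d5", "d9"], {"d4", "d5"}),  # one of two relevant docs retrieved
]
print(mean_recall_at_k(runs, k=2))  # 0.75
```

A consistently low recall@k suggests the generator is often not receiving the passages it needs, which is usually better addressed by improving the retriever or the index than by adjusting the prompt.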

In conclusion, RAG represents a significant shift in text generation, mitigating the limitations of traditional LLMs by embedding retrieval into the generative process. As AI continues to evolve, RAG is poised to play a pivotal role in applications requiring both creativity and precision, offering a promising path toward trustworthy AI systems.

AI430 - Agent HUB For Business Growth

kalpita@ai430.com

© 2025. All rights reserved.