LoRA: Low-Rank Adaptation of Large Language Models (Hu et al.)

This is a summary of the paper "LoRA: Low-Rank Adaptation of Large Language Models" that proposes a novel method to fine-tune large language models (LLMs) efficiently by introducing low-rank adaptations, significantly reducing computational and storage overhead while maintaining performance.


Shubhradeep

Full fine-tuning updates every weight of an LLM, which is computationally expensive and memory-intensive. The cost multiplies when a separate copy of the model has to be trained and stored for each downstream task. LoRA addresses this by keeping the pre-trained model fixed and training only a small set of added low-rank parameters per task.

Key Concepts:

Low-Rank Adaptation (LoRA):

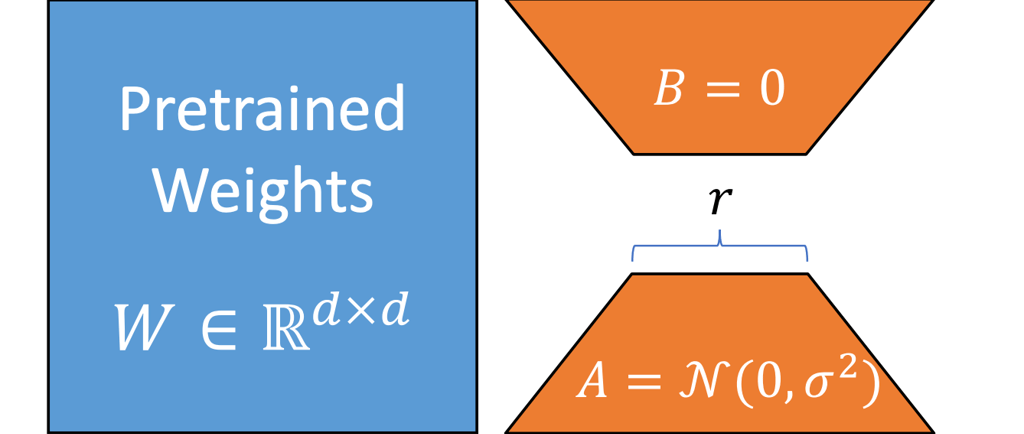

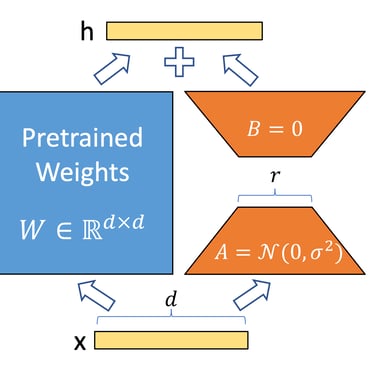

Instead of updating a pre-trained weight matrix directly, LoRA represents the weight update as the product of two much smaller low-rank matrices, avoiding full weight updates.

These low-rank matrices are learned during fine-tuning, while the pre-trained weights remain frozen, preserving the original model.
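The idea can be written compactly in the notation the paper uses, where W_0 is a frozen pre-trained weight matrix, x is the layer input, and B and A are the two trainable low-rank factors. The dimensions d and k are those of W_0, and r is the chosen rank:

```latex
h = W_0 x + \Delta W x = W_0 x + B A x, \qquad
B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
```

Only B and A receive gradient updates; W_0 stays unchanged throughout fine-tuning.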

Efficient Fine-Tuning:

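By training only the low-rank factors, LoRA sharply reduces the number of parameters that must be learned and stored for each task. For GPT-3 175B, the paper reports up to 10,000 times fewer trainable parameters and about 3 times lower GPU memory use than full fine-tuning with Adam.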
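To make the saving concrete, here is a small arithmetic sketch for a single weight matrix. The layer size (4096 x 4096) and the rank (8) are hypothetical values chosen for illustration, not figures from the paper, and the function names are made up for this example.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for the LoRA factors B (d x r) and A (r x k)."""
    return r * (d + k)


# Hypothetical layer size and rank, chosen only for illustration.
d, k, r = 4096, 4096, 8

full = full_finetune_params(d, k)
lora = lora_params(d, k, r)

print(f"full fine-tuning: {full:,} parameters")
print(f"LoRA (r={r}):      {lora:,} parameters")
print(f"reduction:        {full // lora}x")
```

For this one matrix the trainable parameter count drops from d * k to r * (d + k), so the saving grows as the matrix gets larger relative to the rank.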

Implementation:

LoRA inserts learnable low-rank matrices (rank r) into the dense layers of the model. During forward propagation, the adaptation matrices are added to the model’s weights dynamically, requiring minimal modifications to the architecture.
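The forward pass described above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: the class name `LoRALinear`, the helper `matvec`, and the tiny matrix values are assumptions made for the example. Following the paper, B starts at zero and A is drawn from a Gaussian, so the update is zero before training, and the update is scaled by alpha / r.

```python
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


class LoRALinear:
    """A frozen weight W0 (d x k) plus a trainable low-rank update B @ A."""

    def __init__(self, W0, r, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d, k = len(W0), len(W0[0])
        self.W0 = W0  # frozen pre-trained weight, never updated
        # A is r x k with small Gaussian entries.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
        # B is d x r and starts at zero, so B @ A is zero before training.
        self.B = [[0.0] * r for _ in range(d)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W0, x)                   # W0 x
        update = matvec(self.B, matvec(self.A, x))  # B (A x)
        return [b + self.scale * u for b, u in zip(base, update)]


# Hypothetical 2 x 3 weight and input, for illustration only.
W0 = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
layer = LoRALinear(W0, r=1)
x = [1.0, 0.0, -1.0]
print(layer.forward(x))  # equals W0 x while B is still zero
```

Computing B (A x) rather than forming B @ A first keeps the extra work proportional to r, which is why the adapter adds little cost during training.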

Scalability and Generalization:

LoRA's design is modular, allowing the addition of task-specific adapters without interfering with the pre-trained model. It is scalable to very large models and works effectively across diverse downstream tasks.
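One way to picture this modularity is to keep a single shared base weight and a small (B, A) pair per task. The sketch below merges an adapter into the base weight for deployment, subtracts it to recover the base, and then merges a different one. The task names, matrix values, and helper functions are all hypothetical and exist only for this example.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def add_scaled(W, D, s):
    """Return W + s * D element-wise."""
    return [[w + s * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, D)]


W0 = [[1.0, 2.0], [3.0, 4.0]]  # shared frozen weight (hypothetical values)

# One (B, A) pair per task; names and values are illustrative only.
adapters = {
    "summarize": ([[0.5], [0.0]], [[1.0, -1.0]]),
    "classify": ([[0.0], [0.25]], [[2.0, 2.0]]),
}


def merge(W, task):
    """Fold a task's low-rank update into the weight: W + B @ A."""
    B, A = adapters[task]
    return add_scaled(W, matmul(B, A), 1.0)


def unmerge(W, task):
    """Remove a previously merged update: W - B @ A."""
    B, A = adapters[task]
    return add_scaled(W, matmul(B, A), -1.0)


W = merge(W0, "summarize")   # deploy the first task
W = unmerge(W, "summarize")  # recover the base weights
W = merge(W, "classify")     # switch to the second task
print(W)
```

Because a merged weight has the same shape as the original, a deployed model runs exactly as many operations as the base model, and only the small per-task factors need to be stored.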

Results:

The paper reports that LoRA performs on par with or better than full fine-tuning on RoBERTa, DeBERTa, GPT-2, and GPT-3, while training far fewer parameters and adding no inference latency. The evaluations cover natural language understanding (GLUE), text generation (E2E NLG), natural-language-to-SQL generation (WikiSQL), and dialogue summarization (SAMSum).

LoRA's efficiency has made it a widely adopted method for adapting LLMs to specific applications, enabling broader access to LLMs and their practical deployment in resource-constrained settings.
